How Reporting and Moderation on Social Media Are Failing Marginalized Groups

Handling of online abuse often leads to *further* oppression of marginalized voices.

On July 19, a miracle took place on Twitter: Milo Yiannopoulos, a man surely destined to go down in the annals of history as one of the world's biggest trolls, was permanently banned from the site after years of using the service to harass and abuse people. Following a sustained racist and sexist attack on actress Leslie Jones, the social media site finally pulled the plug on a man who makes his living spewing hate.

On the surface, this seems like a victory. Despite its positive outcome, however, it only highlights the glaringly obvious fact that social media has a problem with racism. A quick look at the Community Standards or Guidelines for both Twitter and Facebook shows that they have rules against hate speech and against harassing people based on race, gender, or religion. And yet Yiannopoulos was allowed to run rampant until this latest incident. Despite tech companies' supposed commitments to diversity, these guidelines, as enforced, continue to unfairly target people of color while doing very little to punish the people who target them.

The common response to these issues is a boilerplate statement: social media is just racist. However, this simplification obscures what is actually a multifaceted problem, one that manifests at multiple layers of how platforms conceptualize, define, and respond to online hate.

First Stage: Policy

By their own admission, most staff across the board at both Facebook and Twitter are male and White. This leaves the people in charge ill-equipped to determine what is or isn't bigoted on their platforms. As many sources have noted, White people in general tend to have a loose understanding of systemic issues and often treat discussions of those issues as "reverse racism." This makes them poorly suited to decide on content that affects marginalized people. Because these workforces are so homogeneous, there are obvious blind spots in their policy procedures: privilege means not having to account for many of the needs and cultural offenses that marginalized people of color experience. Policymakers may try to cover all bases, and they certainly have some understanding of racial issues. Without the lived experience, however, their policies will only go so far.

The end result is policy that pays lip service to these issues without taking any real stand on what is and isn't hate speech. Except for the most egregious examples, this leaves open to interpretation whether a given piece of content is hate speech or merely freedom of expression. As Shanley Kane and Lauren Chief Elk-Young Bear have stated: "hundreds of people reporting explicit threats of violence to Twitter are told that it doesn't represent a terms of service violation. Many forms of abuse that women experience constantly on the platform are not technically recognized under the Terms of Service…" Twitter and Facebook both ban harassment, abusive messages, promotion of violence, and threats at a high level. Even so, it has become very obvious that Twitter as a platform defines these terms very differently than many of its most targeted users do.

Second Stage: Reporting

Policy is one level of the issue. Another is the users who report content in the first place. Social media sites rely on their users to report content: given the sheer volume of material, harnessing the people who use the service as moderators makes better financial sense. As Jillian C. York writes, "'Community policing' is intended to be a trust-based system. But users are human, and bring their own biases and interpretations of community guidelines to their decisions about who and what to report."

With a largely White user base, reports against pro-PoC pages are rampant. Some stem from racist targeting and others from a fundamental lack of understanding of the issues being discussed. Pages like Son of Baldwin and Kinfolk Kollective, both pro-Black social justice pages, are frequently reported and suspended over their content. On the other side of the coin, pages that post White Supremacist propaganda or clear hate speech often stay up after being reported. This seems to happen because the reports against them lack the numbers that would trigger a closer investigation. Social media platforms say that the number of complaints doesn't factor into their decisions. In practice, however, this doesn't appear to be true, since many pages and profiles are removed only after concerted reporting efforts. Responding to one incident, Son of Baldwin wrote: "The long and the short of it is that Facebook is ill-equipped to deal with or understand anti-bigotry work. I would say that they treat anti-bigotry work as bigotry, but that would be misleading. Facebook actually privileges bigotry over anti-bigotry. Blatantly anti-black/anti-woman/anti-queer pages have been reported and Facebook has responded, repeatedly, with messages stating that those pages did not violate their policies."

To create and enforce policy more effectively, social platforms would need to address not just the content of their policies. They would also need to address how those policies and reporting mechanisms can themselves be weaponized by privileged users, particularly White users, against users of color.

Third Stage: Moderation

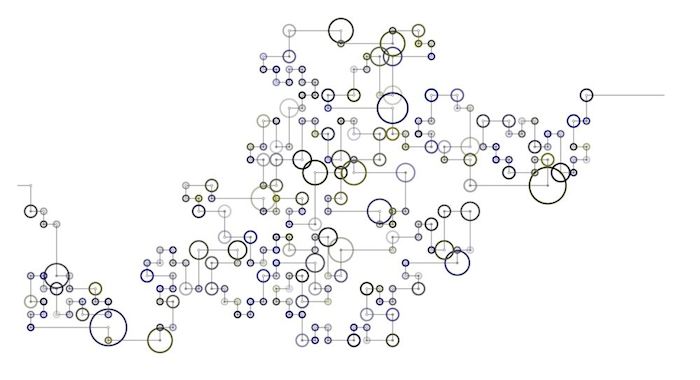

The nature of the reporting system means that many reports are, at best, "false positives": people simply reporting things they don't like or agree with (for example, pages devoted to Black Lives Matter or posts that speak on racial injustice). Users generate a massive volume of complaints every day; Facebook has reported receiving 1 million user reports each day. As a result, many of these complaints are pushed through an algorithm and deleted. There has been talk of using better algorithms to address problems such as incorrectly reported content. In fact, Yahoo has developed an algorithm in this field that can catch hate speech 90% of the time. The errors that remain, however, may still unjustly capture people and pages that discuss harassment and abuse academically. Further, this discussion brings us back to how these algorithms are designed… and who is designing them. As we see in other areas of tech, algorithms, like policy, often encode dominant patterns of power and marginalization.

No matter how advanced algorithms become, some complaints still need to be reviewed by a human being. Given the massive volume of reports, "some" is quite a lot. Content review is often conducted by contract workers in the Philippines, India, and other countries to which US-based tech companies commonly outsource work. Adrian Chen highlighted these conditions in his report on the content moderators behind large social sites. These workers must get through a very large volume of complaints daily and must often make complex decisions about content in a short timeframe. They are overwhelmingly underpaid and subject to serious psychological stress because of the nature of their work. As a first and critical step, better working conditions, more support, and more empowerment for these workers are fundamental to more just moderation on these platforms. Contract workers, both in the US and abroad, often lack not only equitable treatment but also any real voice or influence at tech companies. We therefore have to ask whether the workers closest to these problems are truly given seats at the table as decision makers and influencers at major platforms.

Further, outsourcing geo-specific complaints to people in other locations as a blanket strategy risks missing important context. These workers are familiar with the politics, trends, and news of their own cultures. They may lack the context, however, to make sound decisions about content rooted in another culture, such as US-based social movements like #BlackLivesMatter. Though they have manuals that outline what is and isn't banned content, the job still entails a good deal of personal judgment. It would help if issues of social justice stayed with the groups that have direct experience with them. A great deal of problematic content (genitalia pics, violent imagery and video, etc.) can certainly be handled universally. More nuanced reports, such as those involving specifically American anti-Blackness, should perhaps be reviewed by people who have lived those nuances. Many social media companies do route content that "requires greater cultural familiarity" to localized moderators. This still leaves open whether those moderators have the necessary context to make informed decisions. For example, will White moderators, even in the US, know enough about racial justice to make appropriate choices about #BlackLivesMatter content? In these cases, White privilege, racism, and unconscious bias can absolutely affect the outcome.

Solving the Puzzle of Moderation

The major issue we consistently see in the handling of online abuse is that it often leads to further oppression of marginalized voices. Fundamentally, the user base and administration of these sites are ill-equipped to address these issues, and their bias flows down to the people hired to process and make decisions about reported content. From start to finish, the moderation pipeline shows a lack of input from people with real, lived experience of these issues. Policy creators likely aren't aware of the many subtle ways that oppressive groups use the vague wording of the TOS to silence marginalized voices. Lacking a background in dealing with that sort of harassment, they simply don't have the tools to identify these issues before they arise.

The simple solution is adding diversity to staff. This means more than just one or two people from marginalized groups. The level of representation needed to make a real change is far larger than these groups' share of the population. People from marginalized groups need to make up closer to 50% of the staff in charge of policy creation and moderation. That is what it takes to ensure they actually get equal time at the table and that the overwhelming majority doesn't overshadow their voices. Diversity and context must also be considered in outsourcing moderation. When it comes to social issues specific to location, context, and identity, the final moderation team needs the background and lived experience to process those reports.

To get better, platforms must also address how user-generated reports are often weaponized against people of color. Nothing can be done about the sheer number of White users who make up the majority on these platforms. Even so, better, clearer policy that helps them question their own biases would likely prevent many reports from being filed in the first place. It may also help to implement controls that stop targeted mass-reporting of pages and communities run by and for marginalized people.

Ultimately, acknowledging these issues in the moderation pipeline is the first step to correcting them. Social media platforms must step away from the idea that they are inherently "fair" and accept that their idea of "fairness" in interaction is skewed simply by virtue of being born of a culture steeped in White Supremacy and patriarchy.