The Fantasy and Abuse of the Manipulable User

When mistreating users becomes competitive advantage.

In recent years, many anti-rape activists have rallied under the banner of “enthusiastic consent.”

Enthusiastic consent is not a legal rubric. Instead, it’s a tool that reframes consent as active – as more about “yes means yes” than “no means no.” Sex educator Heather Corinna emphasizes that consent is an “active process of willingly and freely choosing” the activity consented to. Enthusiastic consent is a cornerstone of an evolving movement to dismantle rape culture and supplant it with consent culture.

Rape culture is a wriggling, many-tentacled organism. One of the ways it operates is by suggesting that consent can be determined by anything other than a person’s specific “yes” – that short skirts connote consent, or that going up to someone’s apartment for coffee does. In these cases, rape culture falsely suggests that societal consensus regarding what those signifiers “should” indicate about consent is more powerful than actual consent or non-consent.

Another mechanism behind rape culture is the belief that sexual consent and non-consent exist on a different plane than violation of non-sexual boundaries. But in a consent culture, all individuals have the inalienable right to determine their own boundaries in all situations – and to change those boundaries for any reason at any time. In a consent culture, making assumptions about an individual’s boundaries is anathema. Building a consent culture is about more than sexual activity – it needs to pervade every aspect of our lives… including the parts of it that happen online.

Consent Culture at Work

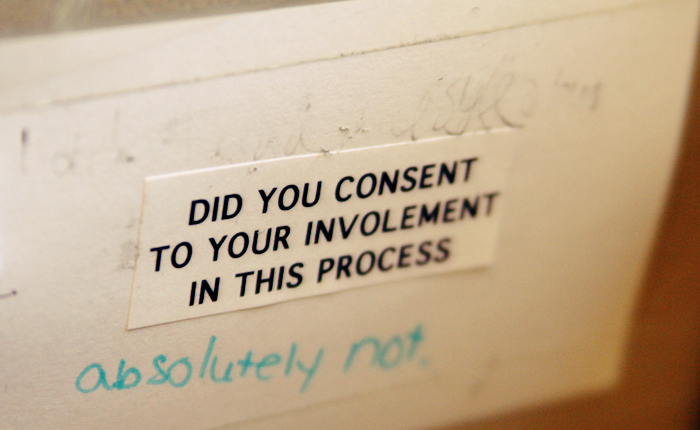

The tech industry does not believe that the enthusiastic consent of its users is necessary. The tech industry doesn’t even believe in requiring affirmative consent. Take e-commerce sites’ email newsletters. Almost every e-commerce site has one. Signup occurs on checkout – there’s a little checkbox above “submit order” that says something like, “Yes, please sign me up to receive special offers from FaintlyNerdyHipsterTShirts.com!” This checkbox near-universally comes handily pre-checked for the user.

Image, cropped and filtered, CC-BY-SA via nauright

The average user, who’s very task-oriented, will complete the minimum set of required fields and move on without considering whether they want to sign up for the newsletter. Assuming consent, rather than waiting for affirmative consent, allows websites to harvest emails at a far greater rate.
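For contrast, here is a minimal sketch of what waiting for affirmative consent could look like on the server side of a checkout form. Everything in it is an assumption made for illustration: the `CheckoutForm` type, the `parse_checkout` and `newsletter_recipients` helpers, and the `newsletter` field name are hypothetical and not taken from any real site. The only thing it relies on is that browsers leave unchecked checkboxes out of a submitted form.

```python
from dataclasses import dataclass
from typing import Iterable, List, Mapping


@dataclass(frozen=True)
class CheckoutForm:
    """Hypothetical record of what the user submitted at checkout."""
    email: str
    # Defaults to False: no newsletter unless the user takes an action.
    newsletter_opt_in: bool = False


def parse_checkout(fields: Mapping[str, str]) -> CheckoutForm:
    """Build a CheckoutForm from submitted fields (hypothetical names).

    An unchecked checkbox is absent from the submitted fields, so a
    missing "newsletter" key is read as "no", never as "yes".
    """
    opted_in = fields.get("newsletter") == "yes"
    return CheckoutForm(email=fields["email"], newsletter_opt_in=opted_in)


def newsletter_recipients(forms: Iterable[CheckoutForm]) -> List[str]:
    """Only people who actively ticked the box end up on the list."""
    return [form.email for form in forms if form.newsletter_opt_in]
```

The design choice is the whole point: the pre-checked version differs by a single default value, and that default decides whose inbox gets filled.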

Once a newsletter signup has been achieved, multiple measures are taken to prevent unsubscribing. The American CAN-SPAM Act requires that commercial emails include information about opting out of future messages; this requirement is typically met with an unsubscribe link. Unsubscribe links are near-universally buried at the bottom of emails, in small print – and they often lead to pages that make it quite difficult to actually unsubscribe from any or all future emails.

We see other examples across the web. Commercial websites capture analytics information about visitors without checking for affirmative opt-in first. Many also place tracking cookies and actively use information about other websites that people visit. Twitter, for example, uses this browsing information to tailor people-to-follow suggestions.

Other products go even further. In 2012, Path accessed its users’ address book contacts and used this information to send their friends promotional texts and phone calls – all without asking permission. The minor scandal that ensued does not erase the fact that someone at Path must have somehow thought this was a good idea – or the fact that Path continued to do this for at least six months after the scandal broke.

Deceptive linking practices – from big flashing “download now” buttons hovering above actual download links, to disguising links to advertising by making them indistinguishable from content links – may not initially seem like violations of user consent. However, consent must be informed to be meaningful – and “consent” obtained by deception is not consent.

Consent-challenging approaches offer potential competitive benefits. Deceptive links capture clicks – so the linking site gets paid. Harvesting of emails through automatic opt-in aids in marketing and lead generation. While the actual corporate gain from not allowing unsubscribes is likely minimal – users who want to opt out are generally not good conversion targets – individuals and departments with quotas to meet will cheer the artificial boost to their mailing list size.

These perceived and actual competitive advantages have led to violations of consent being codified as best practices, rendering them nigh-invisible to most tech workers. It’s understandable – it seems almost hyperbolic to characterize “unwanted email” as a moral issue. Still, challenges to boundaries are challenges to boundaries. If we treat unwanted emails, or accidentally clicked advertising links, as too small a deal to bother with, then we’re asserting that we know better than our users what their boundaries are. In other words, we’re placing ourselves in the arbiter-of-boundaries role which abuse culture assigns to “society as a whole.”

Impacts on Experience

Casual deployment of so-called UX “greypatterns” and “darkpatterns” degrades the overall user experience of the web.

“Banner blindness” – the phenomenon in which users become subtly accustomed to the visual noise of web ads, and begin to tune them out – is a semiconscious filtering mechanism which reduces but does not eliminate the cognitive load of sorting signal from noise. Deceptive linking practices are intended to combat banner-blindness and increase exposures to advertising material. In doing so, they sharply increase the cognitive effort required to navigate and extract information from websites.

Similarly, bombardment with marketing emails requires that users take active measures to filter their incoming mail. The advent of machine-based filtering services like Unroll.me and Gmail’s Inbox Tabs has made this process easier, but has not eliminated the time and energy drain of email filtering.

In contrast to the use of these anti-patterns, the mechanisms surrounding ToS acceptance prove that the industry understands how to obtain affirmative consent from users. It just mostly doesn’t bother. Website terms of service, and other software licenses, typically require that a user actively check an acceptance checkbox, or actively click an acceptance button, because legal precedent indicates that in the absence of affirmative consent ToSes are not a binding contract.

You must be this technical to withhold consent

The industry’s widespread individual challenges to user boundaries become a collective assertion of the right to challenge – that is, to perform actions which are known to transgress people’s internally set or externally stated boundaries. The competitive advantage, perceived or actual, of boundary violation turns from an “advantage” over the competition into a requirement for keeping up with them.

Individual choices to not fall behind in the arms race of user mistreatment collectively become the deliberate and disingenuous cover story of “but everyone’s doing it.”

Small end-runs around users’ affirmative consent set a disturbing precedent which the industry exploits with more explicit violations. For example, Twitter recently introduced photo tagging. All users with public Twitter accounts are, by default, taggable by any other Twitter user. Twitter didn’t initially choose to publicize information about these privacy settings, despite the obvious privacy and safety concerns posed by that default. Luckily, many Twitter users spoke up about this, and worked to inform others about how to change their privacy settings and protect themselves. Twitter eventually also spread information about this feature.

Rollouts like these that opt users into features tend to damage users unevenly; the less technical are the most affected, and users from marginalized communities are the most likely to be endangered by violations of their privacy – think of queer people who are outed to homophobic employers by accidentally-publicized Facebook photos, or stalking targets who need to lock down information about their physical location.
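The alternative to an opt-out rollout can be sketched in a few lines. This is an illustration under stated assumptions, not a description of how Twitter or any other platform stores its settings: `PrivacySettings`, `can_be_tagged`, and the `photo_tagging` feature name are all hypothetical. The idea is simply that a newly shipped feature is off for everyone, including people who have never opened a settings page, until each person turns it on.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class PrivacySettings:
    """Hypothetical per-user record of features explicitly switched on."""
    enabled_features: Set[str] = field(default_factory=set)

    def opt_in(self, feature: str) -> None:
        self.enabled_features.add(feature)

    def opt_out(self, feature: str) -> None:
        # discard() is a no-op if the feature was never enabled.
        self.enabled_features.discard(feature)

    def allows(self, feature: str) -> bool:
        # A feature the user has never heard of is not in the set,
        # so the answer for anything new is "no".
        return feature in self.enabled_features


def can_be_tagged(settings_by_user: Dict[str, PrivacySettings], username: str) -> bool:
    """Users with no stored settings get the most restrictive default."""
    settings = settings_by_user.get(username, PrivacySettings())
    return settings.allows("photo_tagging")
```

Under this arrangement, the people least likely to find a settings page are the ones protected by default, rather than the ones exposed by it.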

Image, cropped and filtered, CC-BY-SA via stevefaeembra

Many people outside the industry are aware that their software persistently and pervasively violates their boundaries, but feel powerless to stop it. Less technical users may not be aware of which information, or how much, they’re leaking to the industry with every click, or may not know to always double-check for privacy settings regarding their location. Even fairly privacy-conscious nontechnical users may simply not know about common anonymizing features like private browsing – much less know how to use heavy-duty privacy preservation software like Tor.

The hacker mythos has long been driven by a narrow notion of “meritocracy.” Hacker meritocracy, like all “meritocracies,” reinscribes systems of oppression by victim-blaming those who aren’t allowed to succeed within it, or gain the skills it values. Hacker meritocracy casts non-technical skills as irrelevant, and punishes those who lack technical skills. Having “technical merit” becomes a requirement to defend oneself online.

Gamification and gamified abuse

As the cliche goes, if you’re not paying for it, you’re not the customer, you’re the product being sold. However, paying customers are also often seen as a resource to be exploited. “Social gaming” and “free-to-play” gaming – as represented by Candy Crush Saga and innumerable Zynga games – use actively manipulative practices to coerce users into paying money for digital goods with no value outside the game’s context. Game mechanics are generally – and often deliberately – addictive, a fact which is used to entrap players as often as it’s used to bring them joy. “Social” games also recruit users’ friends – often without explicit permission – to keep users enmeshed in the manipulative context of the game.

Image, cropped and filtered, CC-BY via rjbailey

By instilling compulsive behavior patterns, exploitative gaming companies – like casinos before them – deliberately erode users’ abilities to set boundaries against their product. It’s easy to bash Zynga and other manufacturers of cow clickers and Bejeweled clones. However, the mainstream tech industry has baked similar compulsion-generating practices into its largest platforms. There’s very little psychological difference between the positive-reinforcement rat pellet of a Candy Crush win and that of new content in one’s Facebook stream.

Social media’s social-reinforcement mechanisms are also far more powerful. The “network effects” that make fledgling social media sites less useful than already-dominant platforms also serve to lock existing users in. It’s difficult to practically set boundaries against existing social media products which have historically served one and one’s friends. People’s natural desire to be in contact with their loved ones becomes a form of social coercion that keeps them on platforms they’d rather depart. This coercion is picked up on and amplified by the platforms themselves – when someone I know tried to delete his Facebook account, the site tried to guilt him out of it by showing him a picture of his mother and asking him if he really wanted to make it harder to stay in touch with her.

The fantasy of the manipulable user

I’ve been in meetings where co-workers have described operant conditioning techniques to the higher-ups, in those words – talking about Skinner boxes and rat pellets and everything. I’ve been in meetings where those higher-ups metaphorically drooled like Pavlov’s dogs. The heart of abuse is a fantasy of power and control – and what fantasy is more compelling to a certain kind of business mind than that of a placidly manipulable customer?

UX “darkpatterns” don’t always work. Most Farmville users are not hyper-profitable whales. It’s scary to deliberately cut oneself off from one’s support network when harassed online, but turning off one’s phone is nonetheless an option. Quora continues to struggle despite its endless innovations on the “darkpattern” front, and the gamification initiative that so excited my former VP couldn’t save a fundamentally ill-conceived and underfunded product. But the simplest of these patterns do not take much finesse to execute effectively, and a nontrivial percentage of these techniques are research-based and will work on enough people to be worth it.

“Growth hacking” – traditional marketing’s aggressive, automated, and masculinely-coded baby brother – will continue to expand as a field and will continue to be cavalier-at-best with user boundaries. These practices will continue to expand in the hope of artificially creating viral hits. Rapidly degrading signal-to-noise ratios are already leading to an arms race of ever-louder, unwanted pseudo-social messaging; this trend will continue, to everyone’s detriment. Initial crude implementations of anti-consent UX will be slowly refined. Much science-fictional hay has been made of our oncoming privacy-free dystopia, but I’m more scared of behavior manipulation. Products already on the market promise to “gamify” our workdays. These products have been critiqued as destructive to intrinsic motivation – and furthermore, as too unrefined to be particularly effective – but some managers care far less about the intrinsic motivation of their workers than they do about compelling them to efficiency using any means available.

And what about a social network that you’re so deeply embedded in that leaving it stops being a feasible last resort if you’re made the target of harassment?

The requisite call to action

I call on my fellow users of technology to actively resist this pervasive boundary violation. Social platforms are not fully responsive to user protest, but they do respond, and the existence of actual or potential user outcry gives ethical tech workers a lever in internal fights about user abuse.

Image, cropped and filtered, CC-BY-SA via quinnanya

I call on my fellow tech workers to build accessible products and platforms which aid users’ resistance, to build tools which restore users’ ability to set and enforce granular electronic boundaries.

Whether these dystopic nightmares come upon us or not, the current situation – in which users’ consent is routinely violated, and in which these violations of consent are normalized into invisibility – is not acceptable in an ethical industry. I call on my fellow tech workers to actively resist any definition of “success” which requires them to ignore or violate users’ boundaries, and to build products that value enthusiastic consent over user manipulation.