The Hidden Dangers of AI for Queer and Trans People

The more we discuss the dangers of training AI on only small sets of data and narrow ideas about identity, the better prepared we will be for the future.

Technology has a long and uneasy relationship with gender and identity. On one hand, technologies like social media allow people to connect across large distances to share their personal stories, build communities and find support. Yet there have also been troubling cases, such as Facebook’s ‘Real Names’ policy, under which users have been locked out of their accounts and left without recourse, and routine smartphone updates that out trans people to their families, friends and coworkers.

As technology gets more complex and ubiquitous, these cases will continue to get worse. Widespread implementation of seemingly “objective” technology means less accessibility for those who do not fit developers’ conceptions of an “ideal user” – usually white, male and affluent. This is especially true with artificial intelligence (AI), where the information we teach an AI directly influences the cognitive development of its machine brain.

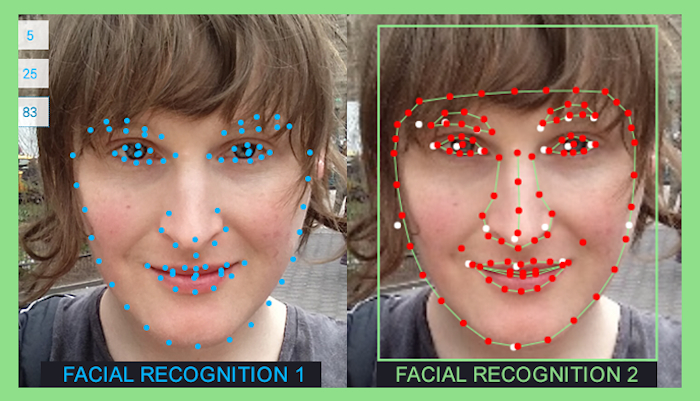

As the co-founder of an artificial intelligence startup, I work with this tech firsthand and have seen how inaccurate it can be with people who don’t fit the developer’s “ideal user.” Facial recognition – a subset of machine vision – is a tangible example, capable of causing great harm when it tries to recognize people it has been taught little about. As a queer- and trans-identifying person, I can see how this lack of understanding could physically and psychologically endanger queer people as AI integrates with our lives.

Gender and Racial Bias

Facial recognition AI has been around for some time, but only recently has it been widely implemented due to better hardware and lower computing costs. Facebook’s photo tagging system – where photos uploaded are automatically tagged with your friends’ faces – is an example of a highly complex facial recognition AI which can not only recognize faces, but also connect the social relationships between the pictured face and your social network, to ask the seemingly simple question: “Is this your friend?”

Image created by the author.

Understanding how AI works shows how bias can be introduced into what is often assumed to be neutral technology. Imagine a library that’s just photographs of people’s faces, each sorted into groups such as “black hair”, “blue eyes”, “rounded nose”. Take these groups, and then sort them based on generalized patterns: for instance, “blue eyes” is associated with the category of “Caucasian.” The result is an AI intelligent enough to give a “read” and approximate an answer, but unable to deal with ambiguities and the spectrum of humanity’s natural diversity; for instance, a queer person might identify outside the gender binary, but is still put into a binary category by the machine.

Problems are also introduced in how AI is “taught” to recognize people: for example, if a computer is primarily trained on selfies of one race, it will be less accurate with selfies of other races. This is one reason why AI already exhibits pervasive, dangerous racial bias. According to a recent article in The Atlantic, “Facial-recognition systems are more likely either to misidentify or fail to identify African Americans than other races, errors that could result in innocent citizens being marked as suspects in crimes.” These problems extend to consumer products: just last year, Google Photos, using AI technology, tagged photos of Black users as “gorillas.”
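One way to make this kind of bias visible is to measure a system's error rate separately for each group of people instead of reporting a single overall accuracy. The sketch below is a minimal illustration of that idea, not a description of how any real facial recognition product is audited. The function name `error_rate_by_group` and the rows in `toy_records` are hypothetical and invented purely for illustration.

```python
from collections import defaultdict

def error_rate_by_group(records):
    """Return {group: fraction of records where predicted != actual}."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, actual, predicted in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}

# Hypothetical evaluation log: (group, true identity, identity the system returned).
# These rows are made up for illustration; they are not measurements of a real system.
toy_records = [
    ("group_a", "p1", "p1"),
    ("group_a", "p2", "p2"),
    ("group_a", "p3", "p3"),
    ("group_a", "p4", "p5"),   # misidentified as someone else
    ("group_b", "p6", "p6"),
    ("group_b", "p7", "p8"),   # misidentified as someone else
    ("group_b", "p9", None),   # None = the system failed to identify anyone
    ("group_b", "p10", "p10"),
]

rates = error_rate_by_group(toy_records)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.0%} of faces misidentified or missed")
```

A single accuracy figure across all eight rows would hide the gap between the two groups, which is why a per-group breakdown is the more honest report.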

As these identity recognition technologies become more ubiquitous, we’ll continue to see how lack of diverse data to train AI adversely affects marginalized groups as they are literally not represented in the AI’s understanding of the world. This has particular implications for trans, genderqueer and other gender non-conforming people. Imagine you’re at a restaurant, you’ve had a lot to drink and you need to go to the bathroom. As you walk down the corridor, a camera scans your face to unlock the women’s or the men’s bathroom door according to the gender it perceives you as.

Image created by the author.

This is like those bathrooms that have keypad locks on them at restaurants where you need to get the password from an employee to gain access. What happens if the camera can’t understand your gender identity and the bathroom door remains shut? What if neither door will open? What if the wrong one does?

It’s not too difficult to imagine this bathroom technology not just malfunctioning, but also being used to enforce laws such as the recently passed HB2 in North Carolina, which bans trans people from using bathrooms in line with their gender identity. Right now, when queer-appearing people are in front of a facial recognition video camera, these systems keep oscillating between the two binary genders. If applied to bathroom locks, AI technology could restrict access to anyone who falls outside of these cis and binary gender norms or assumptions. A related incident happened the other week at a UK McDonald’s, where a lesbian teen was kicked out of the restaurant for using the women’s restroom, because employees thought she was male. If everything becomes automated by machines that don’t allow space for ambiguity, where it’s only binaries, ones and zeroes, more incidents like this are inevitable.

Bathrooms aren’t the only place where trans and queer lives are in physical danger. For example, the healthcare industry may adopt AI sooner than most other professions. When you’re getting medical treatment with a trans/queer body now, doctors are overwhelmingly discriminatory. They’re not used to us, and not sure how to treat unfamiliarity. Many trans people are unable to get proper medical care, prescriptions and gender-affirming surgery as a result of this discrimination. If more and more medical processes are intermediated or administered by AI, this discrimination might get worse. If AI hasn’t been trained to understand and care for trans and queer bodies properly, it could be life-threatening.

AI might make it even more difficult for trans people to get jobs. In New York, for instance, many buildings require ID to gain access. Usually you provide your ID to security and they call up or verify your name on a list. This routine job will probably be replaced by a machine soon. My ID doesn’t match my current name, so I tell security not to use the name on my card. This edge case is a very complex task for a machine to understand, if it will listen to you at all. At the very least, it would reveal your legal name, thus outing you to a potential employer before you even interview. Across these spaces, from public life to healthcare to the workplace, trans and queer people face myriad risks from AI technology; these risks are especially compounded for queer and trans people of color, who already face the dangers of racial bias in AI.

Addressing Machine Bias

Public domain image via bewildlife (border added).

Perhaps one way of creating a more encompassing AI is to have larger and more varied data sets. In Care of AI to Come, my co-founder and I discussed how caretaking AIs should be trained on large, socially diverse data sets in order for AI to better represent all kinds of people. Such data sets could be made available through open-source and wiki databases so that researchers and corporations can work together. Sharing local data and pre-trained models would make it much easier to build better AIs.

But even then, this won’t solve AI’s issue with non-normative gender identity. Only people can define their gender, and machines should not interfere in the process. We don’t have to limit the potential of machines just because it’s easier for us to limit sorting in our own heads. Instead, we should teach machines ambiguity, queerness and fluidity when it comes to identity, rather than limiting them to restricted classifications. One way to do this in AI is by employing deep learning networks, which are good at adapting to new trends over time without actually needing to assign labels to what they are interpreting. These ambiguous trends are represented as multi-dimensional data points called tensors, strung together to discover hidden meanings and relationships within the network.

Image created by the author.

Unsupervised learning algorithms such as K-means, which power this kind of tensor sorting, allow identities to form organically as a limitless number of clusters. You can be a bit of this, a bit of that and perhaps something else altogether new. For instance, if AI doctors are designed to treat all kinds of queer bodies and learn on the fly how to adapt to each patient’s individual needs while referencing similarly clustered past patients, then trans health would be more effective than it is currently, without needing to label what each person’s body represents.
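To make the clustering idea concrete, here is a minimal sketch of K-means in plain Python. Two caveats apply. Standard K-means needs the number of clusters `k` chosen up front, and it assigns each point to exactly one cluster. The `soft_membership` helper is my own illustrative addition, not part of K-means: it turns distances into weights so that a point can be "a bit of this and a bit of that." The data in `toy_points` is hypothetical, and starting from the first `k` points is an assumption made only to keep the run repeatable.

```python
import math

def nearest(point, centroids):
    """Index of the centroid closest to `point` (Euclidean distance)."""
    return min(range(len(centroids)), key=lambda c: math.dist(point, centroids[c]))

def kmeans(points, k, iterations=20):
    """Plain K-means on a list of equal-length numeric tuples."""
    # Assumption: use the first k points as starting centroids (deterministic);
    # real implementations usually initialise randomly.
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        assignments = [nearest(p, centroids) for p in points]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, a in zip(points, assignments) if a == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = tuple(sum(axis) / len(members) for axis in zip(*members))
    return centroids, [nearest(p, centroids) for p in points]

def soft_membership(point, centroids):
    """Illustrative add-on: inverse-distance weights that sum to 1."""
    inverse = [1.0 / (math.dist(point, c) + 1e-9) for c in centroids]
    total = sum(inverse)
    return [w / total for w in inverse]

# Hypothetical 2-D feature vectors; no labels are supplied anywhere.
toy_points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0),
              (10.0, 10.0), (10.0, 11.0), (11.0, 10.0)]

centroids, assignments = kmeans(toy_points, k=2)
weights = soft_membership((5.0, 6.0), centroids)
print(assignments)
print(weights)
```

The groups emerge from the data alone, but how many of them exist is still a human choice, which is one more place where a developer's assumptions can enter the system.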

During this deep learning process, AI can get confused by nuanced social situations and by how to treat people respectfully. The AI should know when to ask for help. AI should be teamed up with humans to avoid issues and provide guidance, like a parent or teacher teaching right and wrong. We’re still in the stage where we have control over what a machine learns, a stage called “supervised learning,” in which we label the categories an AI sorts things into.
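One simple reading of "the AI should know when to ask for help" is a confidence threshold: the system acts on its own answer only when it is very sure, and otherwise hands the decision to a person instead of forcing a category. The sketch below assumes the model outputs a probability-like score per label. The function name `decide_or_defer`, the label names and the 0.9 threshold are all hypothetical choices for illustration.

```python
def decide_or_defer(scores, threshold=0.9):
    """Return the top label only when the model is confident; otherwise defer.

    `scores` maps each label to a probability-like value in [0, 1].
    The default threshold of 0.9 is an arbitrary illustrative choice.
    """
    label, confidence = max(scores.items(), key=lambda item: item[1])
    if confidence >= threshold:
        return {"action": "accept", "label": label, "confidence": confidence}
    # Not confident enough: make no automated decision and ask a person.
    return {"action": "ask_human", "label": None, "confidence": confidence}

# Hypothetical model outputs for two different inputs.
confident = decide_or_defer({"category_a": 0.97, "category_b": 0.03})
ambiguous = decide_or_defer({"category_a": 0.55, "category_b": 0.45})
print(confident["action"], confident["label"])
print(ambiguous["action"], ambiguous["label"])
```

A system without the deferral branch would still return "category_a" for the second input, which is exactly the forced sorting this essay warns about.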

Unsupervised learning, where the machine learns independently from “input without labeled responses,” is a major goal in AI right now. This means that it’s coming up with its own ideas about the world and what to do in it without human intervention. The AI dream of unsupervised bots is not here yet, but supervised learning is a good foundation to build toward it. We need to make sure this foundational layer is receptive to and understanding of all identities.

The more we discuss the dangers of training AI on only small sets of data and narrow ideas about identity, the better prepared we will be for the future. As with most unaddressed issues surrounding the representation of minority groups, it will take tangible and relatable problems that people are experiencing for others to truly understand that how we currently build AI is unsafe. We must express our concerns to ensure that our AI future is malleable and willing to listen and understand us, without judgement or discrimination. The promises of AI benefiting humanity should not outweigh the need to ensure that these benefits are equally distributed across all people, not just those who fit into easy-to-sort categories for machine developers.