Social Media and Academic Surveillance: The Ethics of Digital Bodies

White media pundits and academics have a standard tactic: “Twitter is public.” Therefore, the argument goes, no one, and especially not black women and other WOC, has rights or can complain when their digital bodies and intellectual property are taken without permission, plagiarized, or used as data and news by media and academia. This consistent appeal – “Twitter is public” – obscures the reality of Twitter as a digital public, subject to the same problems of surveillance and ethics we find in geographical space.

In Mike Davis’s book City of Quartz, written before the LA Uprising of 1992, he discusses Los Angeles’s spatial panic around security. In a city lacking open public spaces, organized around the power of private property, and without a cohesive center, he writes:

The carefully manicured lawns of Los Angeles’s Westside sprout forests of ominous little signs warning: ‘Armed Response!’ Even richer neighborhoods in the canyons and hillsides isolate themselves behind walls guarded by gun-toting private police and state-of-the-art electronic surveillance. Downtown, a publicly-subsidized ‘urban renaissance’ has raised the nation’s largest corporate citadel, segregated from the poor neighborhoods around it by a monumental architectural glacis… In the Westlake district and the San Fernando Valley the Los Angeles police barricade streets and seal off poor neighborhoods as part of their ‘war on drugs’. In Watts, developer Alexander Haagen demonstrates his strategy for recolonizing inner-city retail markets: a panopticon shopping mall surrounded by staked metal fences and a substation of the LAPD in a central surveillance tower… This obsession with physical security systems, and collaterally, with the architectural policing of social boundaries, has become a zeitgeist of urban restructuring, a master narrative in the emerging built environment of the 1990s (Davis, 223).

Almost twenty-five years after the publication of this passage, these issues of surveillance and public spaces are now being translated into digital spaces, particularly Twitter.

Photo CC-BY Kayla Johnson, filtered.

Michel Foucault theorized the panopticon in Discipline and Punish: The Birth of the Prison. He writes that the “panopticon” is about discipline and control, based on Jeremy Bentham’s late eighteenth-century design of an “ideal prison.” Foucault’s point was to consider how power, surveillance, and control worked within this architectural “ideal.” In placing the object of the panoptic gaze under surveillance at all times, the goal was “to induce in the inmate a state of conscious and permanent visibility that assures the automatic functioning of power.”

As Davis’s City of Quartz makes clear, the panoptic gaze polices, surveils, and targets minority bodies the most, especially black women and other WOC. Today, we see the same pattern playing out against digital bodies. But now Twitter is the panoptic medium.

Digital Bodies Moving Through Digital Public Spaces

We know from #RaceSwap experiments that Twitter as a medium views digital bodies as extensions of physical bodies. #RaceSwap was an experiment in which several prominent black women and WOC social media activists switched their avatars to pictures of white men, while several white men allied with these activists switched their avatars to pictures of black women and other WOC. The amount of abuse and trolling dramatically decreased for the black women and other WOC activists, while increasing for their white, male counterparts.

All this happened without either group changing anything else about their Twitter presentation or speech. Race, gender, ability, and sexuality are just as marked on digital Twitter avatars as they are in real physical interactions. If Twitter, inhabited by digital bodies, functions as a digital public space, then the affordances of public spaces must be respected. People in public spaces are allowed to protest, hang out with friends, and watch and participate in public lectures and performance art events. If Twitter is Times Square, then the media, academia, and institutions (government, non-profit, etc.) must respect that Twitter users have a right to talk to their friends on the Twitter plaza without people recording them, taking pictures of them, or using their digital bodies as data without permission, consent, conversation, and discussion.

As Eunsong Kim and I have discussed in the #TwitterEthics Manifesto: “In the end, the work, the credit, the compensation, and the view need to be a shared, collaborative process.” Particularly with academics, this issue of data ethics needs to be addressed now. Even feminist theory has begun to call for the end of the hierarchical subject/object split, particularly in the recent Material Feminisms. Feminist quantum physics theorist Karen Barad has already poked holes in Foucault’s rigid panopticon in which watchers do not touch the watched and vice versa. The time to break this cycle is now.

Photo CC-BY jiattison, filtered.

Digital Bodies and the Ethics of Data

One recent event that raised questions around academic surveillance is the Annenberg USC study on Black Twitter. The study’s main goal was to “focus more narrowly on the relationship between popular culture and civic participation in some parts of Black Twitter.” Their site describes how they hosted several events at USC for live-tweeting Scandal, but also “collected publicly-accessible tweets and metadata that includes terms related to Scandal…” They note “these tweets are never made available to anyone outside of our research team, nor do we publish any information about the people who composed these messages.”

Yet from the explanation of their project, it is unclear whether the project asked for consent from the individuals whose tweets were collected. Had the project gone through an Institutional Review Board? (Though USC’s IRB standards do not address digital platforms in any way.)

There was a large wave of anger over what could be gleaned about the study through its website. Because the study originally showed images of three white, male academic project organizers, the power dynamics appeared to show a group of white, privileged, male academics using the data of a huge number of digital black bodies for an academic study without consent, discussion, collaboration, or compensation. Following significant public response, Dayna Chatman, a black, female graduate student at USC who led the study but whose name was originally not visible on the project’s website, spoke about the backlash: “I did not approve the description of the project that was on the Annenberg Innovation Lab website. It does not fully encapsulate the scale, methods, or full reasoning behind the project.”

At least currently, no additional information is available about the project’s methodology or its ethics statements with regard to acquiring data from individual tweeters. Regardless, even if this information is eventually disclosed, it seems clear that Dayna Chatman’s USC academic adviser on this project, a white man, did not have the contextual and historical background to understand the long history of minorities, and especially black men and women, being used as data and experimental bodies for research and scientific experiments. Yet unlike many other one-directional academic studies, the twist in Twitter’s panopticon is that the “subjects” under study talk back to the researchers: #BlackTwitterStudyResults was created as a response and cycled through Black Twitter as a way to speak back to what were seen as problems in the study.

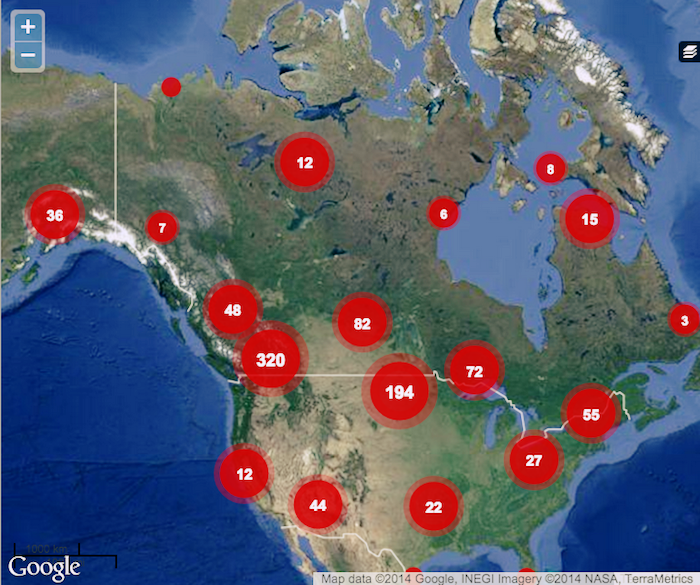

#GiveItUpCCA

Screenshot from the Save Wįyąbi Project.

Another recent example that raises questions of academic surveillance and ethics is the controversy over California College of the Arts’ student project “Cohere”: a website and digital map about missing Indigenous women in the US and Canada. Lauren Chief Elk, one of the creators of the Save Wįyąbi Mapping Project, writes about how Cohere plagiarized their site and data:

“On July 12, 2014 it was brought to our attention that a group of students (Cohere) at the California College of Arts took some of the Save Wįyąbi Project’s work and passed it off as their own for a grant. The work is our interactive map and database: missingsisters.crowdmap.com.”

At the bottom of the Save Wįyąbi Mapping Project is a very clear statement about academic citation and credit: “if you borrow content for your unpaid grassroots community work, we don’t mind at all but please track back and/or link to our work for proper credit and referencing. If you duplicate our work for academic credit or paid projects where you receive private (foundation, crowdfunding etc.) or government money or grants for your work you MUST request direct permission from Save Wįyąbi academic researchers to duplicate or re-map these works in any form.”

The site explicitly lays out the procedures for using and crediting this incredible digital mapping site. Yet those procedures were summarily disregarded and disrespected by the California College of the Arts. As Chief Elk writes about her experiences confronting the organization:

When I was able to reach members of the administration they responded with ‘plagiarism aside’ and ‘putting plagiarism aside’ to my concerns and complaints of the stealing. They also said this was explicitly not about the actual cases or data, and that it was just ‘storytelling’- which was the point they reiterated when deciding it was not plagiarism by their standards (which they subsequently deleted from their website). To the contrary, the lead organizer of this storytelling project openly stated that this was about data collecting, data retaining, and database building. So we think that they not only copy and pasted our layout, design, language, functions, and entire purpose, but that they possibly pulled our cases and data. However we’re not entirely sure because since this was brought forward their entire online presence has evaporated.

The controversy gets further complicated because as an oral history project, a ‘storytelling’ project, the CCA graduate student project should have been under IRB review at the very least. Other academics, in fact, do use IRB reviews or even more extensive informed consent models in order to do data analysis work on social media. One can look at the work of Zeynep Tufekci on the Arab Spring for a discussion of both ethical theory and practice. So the question remains: why does it appear that women of color particularly do not get the same level of ethical consideration? What methodologies, ethics, and responsibilities were the institution and advisors using when they seem to have agreed to the harvesting of digital data without consent or citation? As Chief Elk makes clear in her tumblr post, this continues a long history of violence against Native American bodies and particularly women’s bodies.

The response on Twitter to revelations around the CCA plagiarism was to start the #GiveItUpCCA campaign. Here again, the objects of study, the voices of WOC and black women, are speaking back to the academic complex and calling out the harvesting of their digital work, digital bodies, and digital intellectual property.

The Cohere website has disappeared following online organization… but not before winning an IMPACT Social Entrepreneurship award from the College in April.

Digital Data and Digital Bodies: Ethics and the Immortal Cells of Henrietta Lacks

If one imagines that digital bodies are extensions of real bodies, then every person’s tweets are part of that “public” body. By harvesting digital data without consent, collaboration, discussion, or compensation, academics and researchers are digitally replicating what happened to Henrietta Lacks and her biological cells.

Henrietta Lacks was a working-class African-American woman from Baltimore who had her cervical cancer cells taken without her permission or knowledge during a medical exam. The HeLa cell line became the first human cells to be replicated in a lab and have “contributed to the development of a polio vaccine, the discovery of human telomerase and countless other advances.” Henrietta Lacks died of cancer. In 1971, Obstetrics and Gynecology identified her as the HeLa cell source, but her family did not learn that her cells had been harvested for biomedical research until 1973. That same year, scientists, without obtaining consent or explaining the ramifications, proceeded to collect the family’s blood samples for biomedical research.

The Immortal Life of Henrietta Lacks, written by Rebecca Skloot in collaboration with the Lacks family and published in 2010, is an investigative book that considers the ethical ramifications, the intersectional questions of race and gender, and the replication of a black woman’s body without consent, compensation, acknowledgement, citation, or collaboration. Even after its publication, academic journal articles continued to expose the family’s identity and lay open Henrietta Lacks’ entire genomic history. In fact, in 2013, the HeLa genome sequence was published without the permission or knowledge of the family in G3: Genes, Genomes, Genetics.

In September, several news stories updated the ongoing saga as the NIH changed its rules on data sharing, consent, and ethics in light of all that happened to Henrietta Lacks and her cancer cells. Around the same time as the USC #BlackTwitterStudyResults were cycling through the Twitter timelines, news items in Scientific American and Nature made it into mainstream media to discuss precisely the ethical implications of what has been called “informed consent.”

The new “informed consent” policy is a complete overhaul of previous NIH policies about scientific research and the use of human biological subjects… “not just for genomic data, but also for cell lines or clinical specimens such as tissue samples, even when they are stripped of information that directly identify the source.” This new practice will require academic scientists to explain the long-term use of biological samples in future research and the ramifications of what that would mean (including the possibility of a loss of anonymity and privacy). While this is an important step, academia on an institutional level needs to look at its policies to make sure that digital bodies are given the same consideration of informed consent that real bodies are now supposed to receive in biomedical research.

Minority Bodies and Consent

Photo CC-BY Umberto Salvagnin, filtered.

The scientific and academic history of disregarding rights and ethics in relation to the bodies of minorities, and especially women of color, is visible in other high-profile cases. For example, Nature has reported that “the use of patients’ biological information for previously undisclosed purposes has been the subject of several high-profile cases.” There is the infamous case of the Tuskegee Syphilis Study, in which 600 black men were used as medical subjects to study syphilis without consent, information, or even medical treatment for their actual illnesses. The article “US agency updates rules on sharing genomic data” describes yet another case: “In 2010, the Havasupai tribe of Arizona won a US $700,000 settlement against Arizona State University when blood samples originally provided for a study on diabetes were used in mental-illness research and population studies.”

More recently, and in relation to digital bodies, there has been a major ethical and procedural outcry about Facebook’s experimentation on users in relation to Facebook posts and mood. As this article discusses, there were major violations of IRB procedure and research ethics, even though the study involved both a corporation and an authoritative journal, the Proceedings of the National Academy of Sciences of the United States of America (funded by the US Government). Experimenting on over 600,000 unsuspecting Facebook users, without the consent of any of them, in order to research mass-scale “emotional contagion” sounds straight out of a twisted dystopian movie.

So is it any surprise that academics are looked upon as part of the academic-industrial-government-military panopticon experimenting (without permission or consent) on its public citizens? Why should digital bodies on Twitter “trust” academics when their methodologies and ethics (particularly those of government-funded and thus government-vetted projects) continue to treat Twitter as a way to enact surveillance on future research subjects?

Though certain academic areas and circles are fighting to decolonize academia and particularly digital research, ethical methodologies that constantly consider how projects must be created, credited, and used must be required practice for all academics. On Friday, October 3, 2014, at the University of Michigan, there was an entire conference devoted to Data, Social Justice, and Digital Humanities (#DSJandH). In it, Moya Bailey delivered a talk on “Doing DH Justly: Feminist Ethics in Social Media Research” in which she pushed back on ideas of “IRB paternalism” and called for research as a social justice act. She also directed attention to the DataCenter Research for Justice, which has worked with communities for decades. Ethical academic methodologies are being theorized and practiced, so the question is: why have they not become an academic standard across the board?

Twitter — as a digital panopticon with a twist — means that even though much of the negotiation of power, surveillance, and control functions within the theoretical frameworks set out by Foucault and Surveillance Studies at large, digital bodies on Twitter speak, protest, and fight back.

This is how academics need to understand Twitter: a multivalent, rhizomatic platform with voices that form communities and conversations, and that can talk back or even shout back if they so choose. Twitter as a public space does not mean academics have permission to make digital bodies data points or experimental cells in a petri dish. Unlike the original idea of the panopticon, in which those under surveillance had no option to see or speak back to the people watching them, Twitter allows these social spatial walls to break and the digital bodies or “objects” of an academic study to speak back, protest, and create ethical disruptions.